Aims and objectives:

- Describe three types of evaluation.

- Explain how to create a logic model.

- Identify what to consider when planning an evaluation.

Introduction to evaluation

What is evaluation?

Evaluation is any systematic process to judge merit, worth or significance by combining evidence and values (Better Evaluation, 2022).

Why do an evaluation?

Healthcare resources are limited, and decisions must be made about how to allocate them across different services or technologies. Explicit, science-based criteria for decision-making are important because they improve accountability and give decision-makers the metrics they need to make the best possible decisions.

Delivering an evaluation is often a requirement of funding when implementing a new programme. However, the benefits of developing an evaluation strategy extend far beyond one evaluation. By co-creating a logic model and establishing clear data flows for an evaluation, the programme team can rapidly identify where they can access data, have data readily available for reporting and track the progress of their programme towards short-, medium- and long-term outcomes.

When can you do an evaluation?

Learning for delivery: Early on in a programme, you can use evaluation to determine what has been working well and what has not been working so well, to make informed changes and improve outcomes as you go.

Assessing outcomes: At the end of a programme, you can use evaluation to determine whether your programme is having a positive impact for patients, and whether the programme should continue. At this stage you could also look at changes in resource utilisation as a result of the programme, for example, a reduction in A&E attendances.

Learning for elsewhere: If a programme has been successful in one GP practice, it could spread and be scaled up. Evaluation is a core activity in supporting technologies to grow across the NHS as lessons learned from other locations are key in developing sustainable strategies for implementation.

Types of Evaluation

Process evaluation

- Assesses whether a programme has been implemented as intended

- Can be used to establish why a tool would be useful in some populations and not others

Impact evaluation

- Assesses whether a programme has been successful in achieving its intended outcomes (as per the logic model) and unintended outcomes

Economic evaluation

- Determines whether a programme was the best use of resources

You may also consider conducting an economic evaluation to understand the cost-effectiveness of the technology you are interested in. Depending on the purpose of your evaluation, different types of economic evaluation might be appropriate. Usually, we would like to know how much it would cost to deliver one additional unit of health gain. In a cost-effectiveness analysis, different health technologies or healthcare interventions are compared to understand whether one of them is more or less cost-effective than the others. In some cases, the alternative might be doing nothing, and doing nothing has consequences of its own that need to be considered. Demonstrating cost-effectiveness alone is not always sufficient, since local decision-makers also need to know the impact of providing a specific service on the available budget. To estimate this, a budget impact analysis needs to be conducted.
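The two calculations described above can be sketched in a few lines of Python. This is a minimal illustration only: the `Option` class, the function names and all of the figures are hypothetical, and health gain is assumed to be measured in a single unit such as quality-adjusted life years (QALYs). A real analysis would also need to handle uncertainty, discounting and changing uptake over time.

```python
from dataclasses import dataclass


@dataclass
class Option:
    """One option being compared. All figures used below are made up."""
    name: str
    cost_per_patient: float    # average cost per patient, in pounds
    effect_per_patient: float  # average health gain per patient, e.g. QALYs


def incremental_cost_per_unit_gain(new: Option, comparator: Option) -> float:
    """Extra cost of delivering one additional unit of health gain."""
    extra_cost = new.cost_per_patient - comparator.cost_per_patient
    extra_effect = new.effect_per_patient - comparator.effect_per_patient
    if extra_effect == 0:
        raise ValueError("No difference in health gain, so the ratio is undefined.")
    return extra_cost / extra_effect


def budget_impact(new: Option, comparator: Option,
                  patients_per_year: int, years: int) -> float:
    """Extra spend if every eligible patient moves to the new option."""
    extra_cost = new.cost_per_patient - comparator.cost_per_patient
    return extra_cost * patients_per_year * years


# Hypothetical comparison; the comparator could equally be "do nothing".
usual_care = Option("usual care", cost_per_patient=1000.0, effect_per_patient=0.50)
new_tool = Option("new tool", cost_per_patient=1200.0, effect_per_patient=0.75)

print(incremental_cost_per_unit_gain(new_tool, usual_care))  # 800.0
print(budget_impact(new_tool, usual_care, patients_per_year=500, years=3))  # 300000.0
```

In this made-up example the new tool costs £800 per additional unit of health gain, yet would still add £300,000 to the budget over three years, which is why the two figures are reported separately. The ratio is only straightforward to interpret when the new option both costs more and delivers more health gain.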

Undertaking an economic evaluation might not always be feasible but it is often possible to find published economic evaluations of similar technologies to gain an understanding of potential health and cost implications.

There are also terms that cut across these types of evaluation, such as:

Formative evaluation: Used to make improvements

Summative evaluation: Used to make a decision whether to continue with the programme or tool (start/stop)

View more information and examples of types of evaluation.

How do I do an evaluation?

Planning and logic models

Evaluation should be embedded at the start of the programme, to ensure data and metrics are available to make informed decisions.

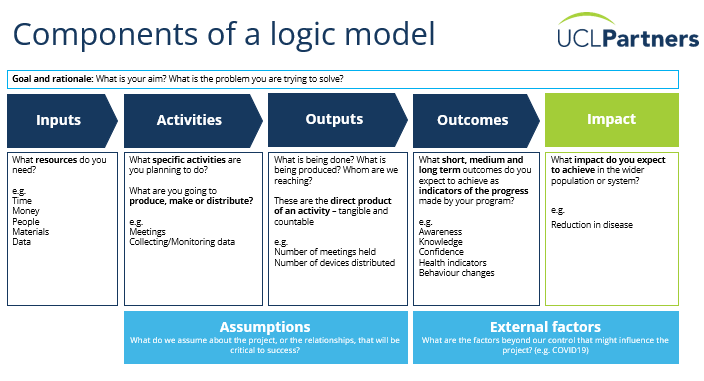

One method of doing this is by creating a logic model. A logic model is a road map that describes the relationships between the inputs, outputs, outcomes and impact of your programme. Logic models should be created in collaboration with stakeholders, to build shared goals and a shared understanding of the intended impacts of the programme and how you plan to achieve them. By creating a logic model at the start of a programme, you can clearly identify how certain aspects of the programme can be evaluated, and the model can serve as a touchpoint throughout the programme to check whether you are on the way to achieving your intended impacts.
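If it helps to keep the logic model in a structured form alongside the diagram, it could be recorded as a small data structure. The sketch below is one possible layout, not a standard format: the `LogicModel` class, its field names and the example programme are all hypothetical, and the split of outcomes into short, medium and long term is an assumption about how your team wants to track progress.

```python
from dataclasses import dataclass, field

OUTCOME_TERMS = ("short", "medium", "long")


@dataclass
class LogicModel:
    """A logic model held as plain lists so it is easy to review and update."""
    programme: str
    inputs: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    # Outcomes grouped by term: "short", "medium" or "long".
    outcomes: dict[str, list[str]] = field(default_factory=dict)
    impact: list[str] = field(default_factory=list)

    def missing_sections(self) -> list[str]:
        """List the sections that still need agreeing with stakeholders."""
        missing = []
        if not self.inputs:
            missing.append("inputs")
        if not self.outputs:
            missing.append("outputs")
        for term in OUTCOME_TERMS:
            if not self.outcomes.get(term):
                missing.append(f"{term}-term outcomes")
        if not self.impact:
            missing.append("impact")
        return missing


# Hypothetical, partly completed model for illustration.
model = LogicModel(
    programme="Remote monitoring pilot",
    inputs=["Project funding", "Clinical staff time"],
    outputs=["Patients onboarded to the tool"],
    outcomes={
        "short": ["Patients feel confident using the tool"],
        "medium": ["Fewer unplanned appointments"],
    },
)

print(model.missing_sections())  # ['long-term outcomes', 'impact']
```

Checking for empty sections during a stakeholder workshop is one simple way to use the model as a touchpoint: anything still listed as missing has not yet been agreed.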

A template, such as below, can be used to create your logic model – more templates are available.

What to consider when designing your evaluation:

- What would you like to know? Describing the objectives of your evaluation will help determine what kind of evaluation you should perform and when you should begin.

- What is already available? Undertake an evidence review to see how others have approached similar evaluations and check if there are validated tools (e.g. surveys, patient reported outcome measures) which you could use. The FutureNHS collaboration platform and Fab NHS Stuff publish examples of case studies and evaluation reports. Networks such as AnalystX (on FutureNHS) can also be a useful source of examples, training and opportunities for collaboration.

- What resources can you commit to this evaluation? Time and space should be made available to support an evaluation and should be an important consideration when applying for funding. The resources you can commit can also help determine the scale of the evaluation.

- At what scale do you want to evaluate? You may want to focus on depth (i.e. detailed evaluation of a single site or aspect) or breadth (i.e. a wide ranging evaluation covering multiple locations or populations).

- What information should you collect? Planning what information you need to support your evaluation is essential to ensure timely collection and analysis. The logic model can be used as a framework to build a data strategy, where you detail when and how you plan to collect and analyse the data (both qualitative and quantitative) required. This is particularly important if you are planning to use patient data in the evaluation, as you will have to assess what information governance measures are required to access and manage the data safely. Your local information governance lead should be a key contact at this stage in the programme, and you may have to complete a Data Protection Impact Assessment (DPIA) which will help you determine what steps you need to complete to access data safely for your evaluation.

- Who would you like to involve in your evaluation? You should map key stakeholders and involve them in shaping the design of the evaluation. You may also want to gather insights from staff and patients who are using or benefitting from the tool as part of data collection. Informed consent should be collected from participants in the evaluation. For more information on consent and templates, please refer to these examples from the NHS Health Research Authority.

- How can you minimise bias in your approach? Consider if your programme will require independent evaluation, to avoid the biases in “marking your own work”. Your local AHSN (Academic Health Science Network) can support evaluations.
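As a rough illustration of the data strategy mentioned under "What information should you collect?", each outcome from the logic model could be paired with an indicator, a data source and a collection schedule. The sketch below assumes a very simple plan: the `DataItem` class, the helper functions and the example entries are hypothetical, and flagging an item as using patient data is only a prompt to speak to your information governance lead, not a substitute for a DPIA.

```python
from dataclasses import dataclass


@dataclass
class DataItem:
    """One row of a data strategy. All example values below are made up."""
    outcome: str             # outcome from the logic model this row measures
    indicator: str           # what will be measured
    source: str              # where the data will come from
    kind: str                # "quantitative" or "qualitative"
    collect_when: str        # when the data will be collected
    uses_patient_data: bool  # True if information governance input is needed


def needs_ig_review(plan: list[DataItem]) -> list[str]:
    """Indicators that rely on patient data and so need governance input."""
    return [item.indicator for item in plan if item.uses_patient_data]


def unmeasured_outcomes(outcomes: list[str], plan: list[DataItem]) -> list[str]:
    """Logic model outcomes with no indicator planned yet."""
    covered = {item.outcome for item in plan}
    return [outcome for outcome in outcomes if outcome not in covered]


# Hypothetical outcomes taken from a logic model.
outcomes = [
    "Fewer A&E attendances",
    "Staff find the tool easy to use",
    "Patients feel more in control of their care",
]

plan = [
    DataItem("Fewer A&E attendances", "Monthly A&E attendances",
             "Hospital activity data", "quantitative", "Monthly", True),
    DataItem("Staff find the tool easy to use", "Staff interview themes",
             "Staff interviews", "qualitative", "At 6 months", False),
]

print(needs_ig_review(plan))                # ['Monthly A&E attendances']
print(unmeasured_outcomes(outcomes, plan))  # ['Patients feel more in control of their care']
```

Listing unmeasured outcomes early makes it easier to spot gaps while there is still time to set up data collection.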

Frameworks

There are frameworks and toolkits available which you could use to evaluate your tool or programme:

- Non-adoption, abandonment, scale-up, spread, and sustainability (NASSS) framework

- Better Evaluation Rainbow Framework

- Evaluation Works toolkit

Evaluation Training

Training in evaluation can be accessed free of charge through the NIHR Applied Research Collaboration North Thames Academy. They deliver courses in evaluation throughout the year aimed at staff in frontline services in the NHS, local government and social care organisations. They also regularly publish resources that can be accessed in your own time.

Further reading

- Evaluation: what to consider

- Midlands DSN Guide to Evaluation Design, Principles and Practice

- Get started: evaluating digital health products

- How to Value Digital Health Interventions? A Systematic Literature Review

- NICE health technology assessment guidelines

- NICE economic evaluation guidelines for public health interventions